This is the complete version of my keynote lecture that opened the Computer Human Interaction (CHI) Congress in The Hague in 2000.

What happens to society when there are hundreds of microchips for every man, woman and child on the planet? What cultural consequences follow when every object around us is ‘smart’, and connected? And what happens psychologically when you step into the garden to look at the flowers – and the flowers look at you?

My talk this morning has four parts.

First, I will talk about where we are headed – right now. I’ll focus on the interaction between pervasive computing, on the one hand, and our social and cultural responses to technology, and increased complexity, on the other.

The second part of my talk is about what I call our innovation dilemma. We know how to do amazing things, and we’re filling the world up with amazing systems and devices. But we cannot answer the question: what is this stuff for? what value does it add to our lives?

The third part of my talk is about the new concept of experience design – and why it is moving centre stage as a success factor in the new economy. Experience design presents designers and usability specialists with a unique opportunity; but I will outline a number of obstacles we need to overcome if we are to exploit it.

I conclude with a proposed agenda for change, which I package as, ‘Articles of Association Between Design, Technology And People’!

Where we are headed

So my first question is this: where are we headed? I want to start with this frog and the story, which many of you will have heard before, about its relationship with boiling water. You remember how it goes: if you drop a frog into the pan when the water is boiling, it will leap out pretty sharpish. But if you put the frog into a pan of cold water, and then heat it steadily towards boiling point, the frog – unaware that any dramatic change is taking place – will just sit there, and slowly cook.

The frog story is one way to think about our relationship to technology. If you could drop a 25-year-old from the year 1800 straight into the bubbling cauldron of a western city today, I’m pretty sure he or she would leap straight back out, in terror and shock. But we, who live here, don’t do that. We have a vague sensation that things seem to be getting warmer and less comfortable – but for most of us, the condition of ‘getting warmer and less comfortable’ has been a constant throughout our lives. We’re used to it. It’s ‘natural’.

But is it? Preparing this lecture required me to step back for a moment to get a clearer view of the big picture. This really was quite a shock. It’s not so much that technology is changing quickly – change is one of the constants we have become used to. And it’s not that technology is penetrating every aspect of our lives: that, too, has been happening to all of us since we were born. No: what shocked me was the rate of acceleration of change – right now. It’s as if the accelerometer has disappeared off the right-hand side of the dial. From the point of view of a frog sitting on the edge of the saucepan – my point of view for today – the water has started to steam and bubble alarmingly. What does this mean? Should I be worried?

One aspect of the heating-up process is that many hard things are beginning to soften. Products and buildings, for example, which someone so insightfully described as ‘frozen software’. Pervasive computing begins to melt them.

Let me explain.

I borrowed this picture from an ad by Autodesk because it so neatly hints that almost everything man-made, and quite a lot made by nature, will soon combine hardware and software. Ubiquitous computing spreads intelligence and connectivity to more or less everything. Ships, aircraft, cars, bridges, tunnels, machines, refrigerators, door handles, lighting fixtures, shoes, hats, packaging. You name it, and someone, sooner or later, will put a chip in it.

Whether all these chips will make for a better product is one of the questions I want to discuss with you this morning. Look, for example, at the list of features on a high-end Pioneer car radio. Just one small product. There would be hundreds like it on the city street we just saw. Shall I tell you a strange thing? There’s no mention, on this endless list of features and functions, of an on-off switch! This car radio is about as complex as a jumbo jet. They also don’t have an on-off switch, as I discovered the first time I asked a 747 pilot to show me the ignition key.

Speaking of jumbos, I saw a great cartoon in the New Yorker depicting a 747 pilot, sitting in sitting-back interaction mode with a PDA in his hand: the caption says, “That’s cool: I can fly this baby through my Palm V.”

Our houses are going the same way, crammed full of chips and sensors and actuators and God knows what. And, to judge by this picture, increasingly bloated and hideous. Why is it that all these “house of the future” designs are so ghastly?

Increasingly, many of the chips around us will sense their environment in rudimentary but effective ways. The way things are going, as Bruce Sterling so memorably put it, “You will look at the garden, and the garden will look at you.”

The world is already filled with eight, 12, or 30 computer chips for every man, woman and child on the planet. The number depends on who you ask. Within a few years – say, the amount of time a child who is four years old today will spend in junior school – that number will rise to thousands of chips per person. A majority of these chips will have the capacity to communicate with each other. By 2005, according to a report I saw a couple of days ago, nearly 100 million west Europeans will be using wireless data services connected to the Internet. And that’s just counting people. The number of devices using the Internet will be ten or a hundred times more.

This explosion in pervasive connectivity is one reason, I suppose, why companies are willing to pay billions of dollars for radio spectrum. In the UK alone, a recent auction of just five bits of spectrum prompted bids totalling $25 billion. That’s an awful lot of money to pay for fresh air. It prompts one to ask: how will these companies recoup such investments? What’s to stop them filling every aspect of our lives with connectivity in order to recoup their investment?

The answer is: not a lot. We hear a lot in Europe about wired domestic appliances, and I can’t say the prospect fills me with joy. Ericsson and Electrolux are developing a refrigerator that will sense when it is low on milk and order more direct from the supplier. Direct from the cow for all I know! I can just see it. You’ll be driving home from work and the phone will ring. “Your refrigerator is on the line”, the car will say; “it wants you to pick up some milk on your way home”. To which my response will be: “tell the refrigerator I’m in a meeting.”

But pervasive computing is not just about talking refrigerators, or beady-eyed flowers. Pervasive means everywhere, and that includes our bodies.

I’m surprised that the new machines which scan, probe, penetrate and enhance our bodies remain so low on the radar of public awareness. Bio-mechatronics, and medical telematics, are spreading at tremendous speed. So much so, that the space where ‘human’ ends, and machine begins, is becoming blurred.

There’s no Dr Frankenstein out there, just thousands of creative and well-meaning people, just like you and me, who go to work every day to fix, or improve, a tiny bit of body. Oticon, in Denmark, is developing hundred-channel amplifiers for the inner ear. Scientists are cloning body parts, in competition with engineers and designers developing replacements – artificial livers and hearts and kidneys and blood and knees and fingers and toes. Smart prostheses of every kind. Progress on artificial skin is excellent. Tongues are a tough challenge, but they’ll crack that one, too, in due course.

Let’s do a mass experiment. I want you to touch yourself somewhere on your body. Yes, anywhere! Don’t touch the same bit as the person next to you. Whatever you’re touching now, teams somewhere in the world are figuring out how to improve it, or replace it, or both. Thousands of teams, thousands of designs and techniques and innovations.

And this is just to speak of stand-alone body-parts. If any of these body parts I’ve mentioned has a chip in it – and most of them will – that chip will most likely be connectable. Medical telematics is one of the fastest growing, and probably the most valuable, sectors in telecommunications – the world’s largest industry. There’s been a discussion of patient records, and privacy issues; and the media are constantly covering such technical marvels as remote surgery.

But we hear far less about connectivity between monitoring devices on (or in) our bodies, on the one hand – and health-care practitioners, their institutions and knowledge systems, on the other. But this is where the significant changes are happening. Taking out someone’s appendix remotely, in Botswana, is no doubt handy if you’re stuck there, sick. But that’s a special occurrence.

Heart disease, on the other hand, is a mass problem. It’s also big business. Suppose you give every heart patient an implanted monitor, of the kind shown here. It talks wirelessly to computers, which are trained to keep an eye open for abnormalities. And bingo! Your body is very securely plugged into the network. That’s pervasive computing, too.

And that’s just your body. People are busying themselves with our brains, too. Someone already has an artificial hippocampus. British Telecom are working on an interactive corneal implant. BT, which spends $1 million an hour on R&D – or is it a million dollars a minute, I forget – are confident that by 2005 their lens will have a screen on it, so video projections can be beamed straight onto your retina. In the words of BT’s top techie, Sir Peter Cochrane, “You won’t even have to open your eyes to go to the office in the morning.” Thank you very much, Sir Peter, for that leap forward!

By 2010, BT expect to be making direct links to the nervous system. This picture shows some of the ways they might do this. Links to the nervous system – links from it. What’s the difference? Presumably BT’s objective is that you won’t even have to wake up to go to the office…

It’s when you add all these tiny, practical, well-meant and individually admirable enhancements together that the picture begins to look creepy.

As often happens, artists and writers have alerted us to these changes first. In the words of Derrick de Kerckhove, “We are forever being made and re-made by our own inventions.” And Donna Haraway, in her celebrated Cyborg Manifesto, observed: “Late 20th-century machines have made thoroughly ambiguous the difference between natural and artificial, mind and body, self-developing and externally designed. Our machines are disturbingly lively, and we are frighteningly inert.”

Call this passive acceptance of technology into our bodies Borg Drift. The drift to becoming Borg features a million small, specialised acts. It’s what happens when knowledge from many branches of science and design converges – without us noticing. We are designing a world in which every object, every building – and every body – becomes part of a network service. But we did not set out to design such an outcome. How could it be? So what are we going to do about it?

This is the innovation dilemma I referred to at the beginning.

Innovation dilemma

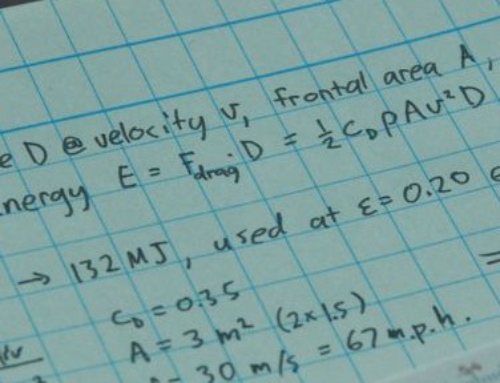

To introduce the second part of my talk, I made this diagram. Every CHI talk has to have a ‘big concept’ diagram – and I’m not about to buck the trend.

The innovation dilemma is simply stated: many companies know how to make amazing things, technically. That’s the top line in my chart: it keeps heading manfully upwards. It could just as easily apply to the sale of mobile devices, Internet traffic, processor speeds, whatever. Think of it as a combination of Moore’s Law (which states that processor speeds double and costs halve every 18 months or so) and Metcalfe’s Law (which states that the value of a network rises in proportion to the square of the number of people attached to it).

The dilemma is that we are increasingly at a loss to understand what to make. We’ve landed ourselves with an industrial system that is brilliant on means, but pretty hopeless when it comes to ends. We can deliver amazing performance, but we find value hard to think about.

And this is why the bottom line – emotionally if not yet financially – heads south.

The result is a divergence – which you see here on the chart – between technological intensification – the high-tech blue line heading upwards – and perceived value, the green line, which is heading downwards. The spheroid thing in the middle is us – hovering uneasily between our infatuation with technology, on the one hand, and our unease about its meaning, and possible consequences, on the other.

I have decided to call this Thackara’s Law: if there is a gap between the functionality of a technology, on the one hand, and the perceived value of that technology, on the other, then sooner or later this gap will be reflected – adversely – in the market. You can judge for yourselves whether the Nasdaq’s recent downturn confirms Thackara’s Law, or not.

www.doorsofperception.com/img/chi/018.jpg

In this next slide I have re-labelled the value line as the carrying capacity of the planet. I know there’s nothing worse than being made to feel guilty by ghastly downwards-heading projections about the environment. As an issue, ‘the environment’ seems to be all pain, and no gain. My point is that although we may push sustainability – or rather, the lack of it – out of our conscious minds, we feel it nonetheless. I believe that the carrying capacity of the planet, and our background anxiety about technological intensification, are two aspects of the same cultural condition.

The green line on my chart describes a synthesis of environmental and cultural angst. The two lines are diverging because for far too long we’ve been designing things without asking these simple questions: what is this stuff for? what will its consequences be? And, are we sure this is a good idea?

User experience design

This brings me to the third part of my talk, where I connect the concept of an innovation dilemma to the business of this conference, “designing the user experience”, which seems to be a major preoccupation of the new economy. My question is this: what kind of experiences should we be designing? And how should we be doing it?

Another way to think about this question is by changing the lines on the chart. What products or services might we design which exploit booming technology and connectivity – which are not, after all, going to go away – while also delivering the social quality, and environmental sustainability, that we also appear to crave?

How, in other words, might we make that green line turn upwards? One way is to shift the focus of innovation from work to everyday life. People are by nature social creatures, and huge opportunities await companies that find new ways to enhance communication and community among people in their everyday lives. ‘Social computing’, in a word. Or rather, two.

Social communications often do not have a work-related goal, so they don’t get much attention from industry. Low-rate telephone charges probably explain the low priority given to social communications by TelCos in their innovation. But social communication occupies a large amount of time in our daily lives. About two-thirds of our conversational exchanges are social chitchat. These are different from the ‘purposive’, or task-related communications, that feature in most telecommunication advertising. All those busy executives rushing around being – well, busy. Not to say obnoxious.

Social communication among extended families and social groups is a huge and largely unexplored market. I discovered just how big the potential is as a member of a project called Presence. Presence is part of an important European Union programme called i3 (it stands for Intelligent Information Interfaces). Presence addressed the question: ‘How might we use design to exploit information and communication technologies in order to meet new social needs?’ In this case, the needs of elderly Europeans. Presence brought together companies, designers, social research and human factors specialists, and people in real communities in towns in three European countries.

We learned a valuable lesson in Presence: setting out to ‘help’ elders, on the assumption that they are helpless and infirm, is to invite a sharp rebuff. Unless a project team is motivated by empowerment, not exploitation, these ‘real-time, real-world’ interactions will not succeed. Sentimentality works less well, we found, than a hard-headed approach. Our elderly ‘actors’ reacted better when we decided to approach them more pragmatically as ‘knowledge assets’ that needed to be put to work in the information economy. Old people know things, they have experience, they have time. Looked at this way, a project to connect elderly people via the Internet became an investment, not a welfare cost.

We also evolved a hybrid form of co-authorship during Presence. Telecommunication and software companies routinely give prototype or ‘alpha’ products to selected users during the development process. Indeed, most large-scale computer or communication systems are never ‘finished’ – they are customised by their users continuously, working with the supplier’s engineers and designers. In Presence, too, elderly people were actively involved, along with designers, researchers, and companies, in the development of new service scenarios.

Designing with, rather than for, elderly people raises new process issues. Project leaders have to run research, development, and interaction with citizens in parallel, rather than in linear sequence. We learned that using multiple methodologies, according to need and circumstance, works best: there is no correct way to do this kind of thing. The most pleasing aspect was the way that designers and human factors specialists came – if not to love, then at least to respect – each other. Once you get away from either/or – and embrace and/and – things loosen up amazingly.

Presence also raised fascinating issues to do with the design qualities of so-called ‘hybrid worlds’. As computing migrates from ugly boxes on our desks, and suffuses everything around us, a new relationship is emerging between the real and the virtual, the artificial and natural, the mental and material.

Social computing of the kind we explored in Presence is unexplored territory for most of us. I can think of few limits to the range of new services we might develop if we simply took an aspect of daily life, and looked for ways to make it better. I even found a list of common daily activities which have deep cultural roots, but which we can surely improve. I took the list from E. O. Wilson’s book, Consilience, in which he reflects on the wide range of topics that anthropologists and social researchers have studied, in relative obscurity, for several decades.

To recap on the story so far: We face an innovation dilemma: we know how to make things, but not what to make. To resolve the innovation dilemma, we need to focus on social quality and sustainability values first, and technology second. And I described, through the example of the Presence project, how one might take one aspect of daily life, and make it better, using information technology as one of the tools.

Usability of any kind used to be either ignored completely, or treated as a downstream technical specialisation. Many of you know, better than I, what it is like to be asked to ‘add’ usability to some complex, and sometimes pointless, artefact – after everyone else has done their thing.

Today, all that is changing. In the new economy, we hear everywhere, the customer’s experience is the product! Logically, therefore, the customer’s experience is critical to the health of the firm itself!

A new generation of companies has burst onto the scene in a dramatic way over the last couple of years to meet this new challenge. They are a new and fascinating combination of business strategy, marketing, systems integration, and design. Their names are on the lips of every pundit, and on the cover of every business magazine. I thought it worth looking at a couple of these new companies.

In Scient’s discussion of user experience, the word architect has been turned into a verb, as in “The customer experience centre architects e-business solutions”. For Scient, customer experience design capabilities include information architecture, user interface engineering, visual design, content strategy, front-end technology, and usability research. Scient proclaims with gusto that “customer experience is a key component in building a legendary brand”. True to these beliefs, Scient hired a CHI luminary, John Rheinfrank. John has become the Hegel of user experience design with the wonderful job title of “Master Architect, Customer Experience”.

Over at Sapient, Rick Robinson, previously a founder of e-Lab in Chicago, has been appointed “Chief Experience Officer”. Rick is proselytising for “experience modelling” which, he promises, “will become the norm for all e-commerce applications”. Experience design, whispers Sapient modestly, will “transform the way business creates products and services . . . by paying careful attention to an individual’s entire experience, not simply to his or her expressed needs”.

The group called Advance Design is an informal, sixty-strong workshop, meeting once or twice a year, convened by Clement Mok from Sapient and Terry Swack at Razorfish, and featuring most of the luminaries in the New Age companies I referred to just now. I reckon that the energy and rhetoric of “user experience design” probably originated here, in this group of pioneers.

I cannot end my quick excursion into the new economy without mentioning Rare Medium, whose line on customer experience design falls somewhere between the Reverend Jerry Falwell and the Incredible Hulk. Rare talks about “the creation phase” in a project, then goes on to describe the so-called “heavy lifting” stage of the engagement, before segueing back into the last phase of the Rare methodology, “Evolution”.

Agenda for change

Now, I’m teasing good people here. I’m probably jealous that nobody made me a “Master Architect of Customer Experience”. Some of the language used by these new companies about customer experience design is a touch triumphalist. But this focus on customer experience design is a major step forwards from the bad old days – that is, the last 150 years of the industrial age – when the interests of users were barely considered. Besides, it’s tough out there. The new economy does not reward shrinking violets. But it’s because design and human factors are now being taken more seriously, that we need to be more self-critical now – not less.

To be candid, I worry that by over-promoting the concept of “user experience design” we may be creating problems for ourselves down the line.

Language matters. Let me quote you the following words from an article about last year’s CHI: “The 1999 conference on human factors in computing posed the following questions: What are the limiting factors to the success of interactive systems? What techniques and methodologies do we have for identifying and transcending those limitations? And, just how far can we push those limits?”

Do these words sound controversial to you? Probably not. They describe what CHI is about, right? But those innocuous words make me feel really uneasy.

Take the reference to “human factors in computing”. The “success of interactive systems” is stated to be our goal – not the optimisation of computing as a factor in human affairs. Do you consider yourself to be just a “factor” in the system? I don’t think so. But CHI’s own title states just that. Language like this is insidious. It’s not about the success of people, and not the success of communities – but the success of interactive systems!

We say we’re user-centred, but we think, and act, system-centered.

My critique of system-centeredness is hardly new. The industrial era is replete with complaints that, in the name of progress, we wilfully subjugate human interests to the interests of the machine. Remember Thoreau’s famous dictum that, “We do not ride on the railroad – it rides on us”? The history of industrialisation is filled with variations on that theme.

In a generation from now – say, when the child I mentioned earlier has her first child – what will writers say about pervasive computing? I believe we should try to anticipate the critics of tomorrow, now.

As Bill Buxton (a leading interaction designer) would say: usable is not a value; useful is a value. Making it easier for someone to use a system does not, for me, make it a better system. Usability is a pre-condition for the creation of value – but that’s something different.

The words creation of value are important. I do not mean the delivery of value. Users create knowledge, but only if we let them. I recommend an excellent book by Robert Johnson called User-Centered Technology for its explanation that most rhetoric about user experience depicts users as recipients of content that has been provided for them.

A passive role in the use of a system is the antithesis of the hands-on interactions by which we learn about the non-technological world. At the extreme dumb end of the spectrum, you find the concept of “idiot-proofing” – the idea that most people know little or nothing of technological systems and are seen as a source of error or breakdown. To me, I’m afraid, it’s the people who hold those views who are the real idiots.

Many of you may disagree vehemently with this, but I believe hiding complexity makes things worse. Interfaces which mask complexity render the user powerless to improve it. If a transaction breaks down, you are left helpless, unable to solve what might be an underlying design problem.

An architecture of passive relationships between user and system is massively inefficient. I agree with the argument that if a thing is worth using, people will figure out how to use it. I would go further: in figuring out how to use stuff, users make the stuff better. I’ll return to this idea in a moment.

The casual assumption that only designers understand complexity is related to another danger: the denigration of place. ‘Context independence’ and ‘anytime, anywhere functionality’ are, for me, misguided objectives. If we are serious about designing for real life, then real contexts have to be part of the process. User knowledge is always situated. What people know about technology, and the experiences they have with it, are always located in a certain time and place.

I would go further, and assert that ‘context independence’ destroys value. Malcolm McCullough, who wrote a terrific book called Abstracting Craft, is currently exploring ‘location awareness’ and has become critical of anytime/anyplace functionality. “The time has arrived for using technology to understand, rather than overcome, inhabited space”, he wrote to me recently; “design is increasingly about appropriateness; appropriateness is shaped by context; and the richest kinds of contexts are places.”

Putting the interests of the system ahead of the interests of people exposes us to another danger: speed freakery. “Speed is God; Time is the Devil”, goes Hitachi’s company slogan. We’re constantly told that survival in business depends on the speed with which companies respond to changes in core technologies, and to shifts in our environments. I tend towards a contrary view, that industry is trapped in a self-defeating cycle of continuous acceleration. Speed may be a given, but – like usability – it is not, per se, a virtue.

I believe we need to begin designing for multiple speeds, to be more confident and assertive in our management of time. Some changes do need to be speed-of-light – but others need time.

We have to stop whingeing about the pressures of modern life and do something about them. One way, I propose, is to budget and schedule time for reflection. Such ‘dead’ time or ‘re-booting’ time is important for people and organisations alike. We need to distinguish between time to market and time in market – a lesson I predict will be learned the hard way by many of the ‘pure-play’ dot coms. Yes, industry needs concepts, but it also needs time to accumulate value. Connections can be multiplied by technology – but understanding requires time and place.

CHI goes to Hollywood

You may well object that your work is complicated enough as it is, without being subjected to my flaky and unrealistic demands. I sympathise with the anxiety that involving users on a one-to-one basis would lead to ‘flooding’, and that nothing would ever get done.

But let’s try to re-frame the question. Let’s return to my suggestion that we replace the word ‘user’ with the word ‘actor’. I like the word actor because although actors have a high degree of self-determination in what they do, they do their thing among an amazing variety of other specialists doing theirs. There’s the writer of the screenplay, for example. The screenplay holds a film together. Without a screenplay, no film would ever get made. A movie also has an amazing array of specialised skills and specialisms – craft experts – such as the lighting and sound guys – and all those “best boys” and “gaffers” and “chief grips” – who know whatever it is that they do!

The Hollywood Model makes a lot of sense to me when thinking about the collaborative design of complex interactive systems. As an experiment, I put all the keywords and specialisms listed in the CHI conference programme into these credits for a complex interactive system I’ve called THING. On these credits are all the disciplines and approaches needed to make THING.

Let’s assume that the producer of THING is a company. Companies have money, and they co-ordinate projects. And we already agreed that people, formerly known as ‘users’, are the actors. The obvious question arises: who is the scriptwriter of THING? And who is the director?

I think the role of scriptwriter might possibly go to designers. Designers are great at telling stories about how things might be in the future. Someone has to make a proposal to get the THING process started. This picture, of a next-generation mobile ear device, was designed by Ideo mainly to stimulate industry to think more broadly about wirelessness. One can imagine that such an image might trigger a large and complex project by a TelCo.

Like scriptwriters, designers tend to play a solitary rather than collaborative role in the creative process. Clement Mok (Chief Creative Officer of Sapient) put it rather well, in a magazine called Communications recently: “Designers are trained and genetically engineered to be solo pilots. They meet and get the brief, then they go off and do their magic.” Clement added that he thought software designers and engineers are that way too.

This suggests to me that, although designers should occupy the role of screenwriter, they should not necessarily be the director and run the whole show. Designers are not good at writing non-technological stories.

These sunglasses, also by Ideo, are a high-tech gadget whose function is to protect the wearer from intrusive communications. But in my opinion, you don’t protect privacy with gadgets, you protect it by having laws and values to stop people filling every cubic metre on earth with what Ezio Manzini so eloquently terms ‘semiotic pollution’. For me, gadget-centredness is the same as system-centredness – and neither of the two is properly people-centered. This is why designers are not, for me, eligible automatically to be the director of THING.

Don’t get me wrong. People do like to be stimulated, to have things proposed to them. Designers are great at this. But the line between propose and impose is a thin one. We need a balance. In my experience, the majority of architects and designers still think it is their job to design the world from outside, top-down. Designing in the world – real-time, real-world design – strikes many designers as being less cool, less fun, than the development of blue-sky concepts.

So who gets to be director of THING? I say: we all do. In the words of Nobel Laureate Murray Gell-Mann, innovation is an ‘emergent phenomenon’ that happens when there is interaction between different kinds of people, and disparate forms of knowledge. We’re talking about a new kind of process here – design for emergence. It’s a process that does not deliver finished results. It may not even have a ‘director.’

Perhaps we might think about the design of pervasive computing as a new kind of street theatre. We could call it the Open Source Theatre Company. Open Source is revolutionary because it is bottom-up; it is a culture, not just a technique. Some of the most significant advances in computing – advances that are shaping our economy and our culture – are the product of a little-understood hacker culture that delivers more innovation, and better quality, than conventional innovation processes.

Open Source is about the way software is designed and, as we’ve seen, ‘software’ now means virtually everything. Computing and connectivity permeate nature, our bodies, our homes. In a hybrid world such as this, networked collaboration of this kind is, to my mind, the only way to cope.

ARTICLES OF ASSOCIATION BETWEEN DESIGN, TECHNOLOGY, AND THE PEOPLE FORMERLY KNOWN AS USERS

The interaction of pervasive computing with social and environmental agendas for innovation represents a revolution in the way our products and systems are designed, the way we use them – and how they relate to us.

Locating innovation in specific social contexts can, I am sure, resolve the innovation dilemma I talked about today. Designing with people, not for them, can bring the whole subject of ‘user experience’ literally to life. Looked at in this way, success will come to organisations with the most creative and committed customers (sorry, ‘actors’).

The signs of such a change are there for all to see. Enlightened managers and entrepreneurs understand, nowadays, that the best way to navigate a complex world is through a focus on core values, not on chasing the latest killer app. (This picture illustrates the core values of the French train company, SNCF).

Business magazines are full of talk about a transition from transactions, to a focus on relationships. We are moving from business strategies based on the ‘domination’ of markets, to the cultivation of communities. The best companies are focussing more on the innovation of new services, and new business models, than on new technology per se. They are striving to change relationships, to anticipate limits, to accelerate trends.

As designers and usability experts we need to study, criticise and adapt to these trends. Not uncritically, but creatively.

To conclude my talk today, I have drafted some “Articles of Association Between Design, Technology and The People Formerly Known As Users”. Treat them partly as an exercise, partly as a provocation. They go like this.

Articles of Association Between Design, Technology and The People Formerly Known As Users

Article 1:

We cherish the fact that people are innately curious, playful, and creative. We therefore suspect that technology is not going to go away: it’s too much fun.

Article 2:

We will deliver value to people – not deliver people to systems. We will give priority to human agency, and will not treat humans as a ‘factor’ in some bigger picture.

Article 3:

We will not presume to design your experiences for you – but we will do so with you, if asked.

Article 4:

We do not believe in ‘idiot-proof’ technology – because we are not idiots, and neither are you. We will use language with care, and will search for less patronising words than ‘user’ and ‘consumer’.

Article 5:

We will focus on services, not on things. We will not flood the world with pointless devices.

Article 6:

We believe that ‘content’ is something you do – not something you are given.

Article 7:

We will consider material and energy flows in all the systems we design. We will think about the consequences of technology before we act, not after.

Article 8:

We will not pretend things are simple, when they are complex. We value the fact that by acting inside a system, you will probably improve it.

Article 9:

We believe that place matters, and we will look after it.

Article 10:

We believe that speed and time matter, too – but that sometimes you need more, and sometimes you need less. We will not fill up all time with content.

Which is as good a moment as any, I think, for me to end. Thank you for your attention.

(This text was John Thackara’s keynote speech at CHI2000 in The Hague. CHI is the worldwide forum for professionals who influence how people interact with computers. 2,600 designers, researchers, practitioners, educators, and students came to CHI2000 from around the world to discuss the future of computer-human interaction.)